Sprint Lab x Ludology: The Anatomy of Games (An AI-enhanced Mini-Sprint)

We are thrilled when curious minds connect and collaborate with us to create something new!

Our most recent adventure in the Sprint Lab came in the form of Simon Huber, lead professor in Game Design at the University of Vienna. Simon heard our CEO Barbara Ruehling on the podcast “How Books Are Made,” and, as a researcher who studies books, his interest was piqued. Fascinated by the method, he reached out to see whether we could design a Book Sprint on a budget. After a few months of brainstorming, we came up with a one-day mini-Sprint that distills the essence of collaborative knowledge production into a 10-hour session, with some AI assistance.

We ran this one-day mini-Sprint at the beautiful University of Vienna on May 10. The goal of the Sprint was to lay the foundation for a book manuscript, zine, or series of zines that would comprehensively tackle the discipline of Ludology, the study of games. The eight authors who joined us were students and scholars who had also participated in a Ludological Symposium the day before.

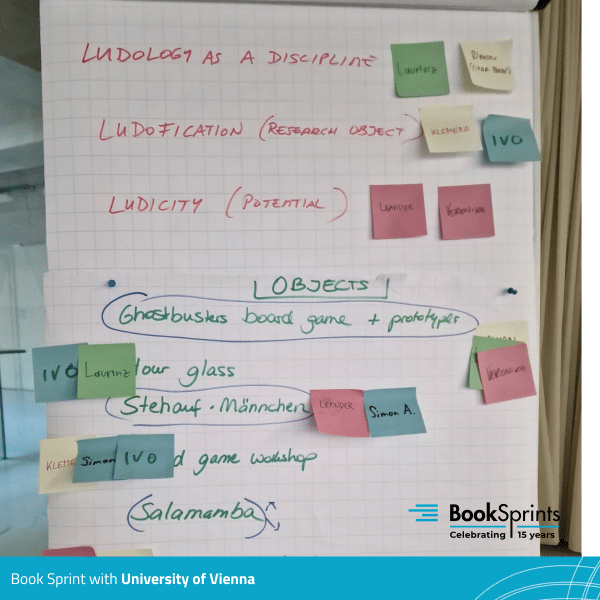

A peek at the authors’ goals for this 10-hour mini-Sprint.

Values-based integration of AI into the Sprint Design

With limited time and resources, our facilitators at Book Sprints designed the Sprint to make the most of what they did have in the 10-hour session: above all, the rich knowledge base these ludological scholars had built over years of study and research.

The Sprint concept was to use AI as an assistant to the human experts. The human experts would provide the knowledge and insights through conversation and discussion, while AI would help record and summarize these into workable initial drafts for the experts to review, edit, and enhance. The AI would not source any information from the internet or anywhere else; the prompts would draw solely on the recorded contributions of the human experts.

Furthermore, humans would always be in the loop. The process would start with the human experts, then AI would provide assistance, then the text would go back to the humans for checking and editing, then to AI for refinement, and finally back to the humans for another check. This design aligns with our core values for incorporating AI into our methods: ethical and transparent use, productivity, and accuracy and trust.

In practice, the Sprint began with the facilitator, Barbara, introducing what Book Sprints is and then establishing, together with the group, the goal for the day. This shared goal guided the participants through the day's activities.

The next step, which was unique to this Sprint, was to use an AI tool to record the authors' conversations about the content of each chapter. We used otter.ai to generate a relatively accurate transcript of these rich, interesting expert discussions. The transcript was then fed into ChatGPT through a series of small, specific prompts, designed before the Sprint, to summarize and structure the content.

Our facilitators spent a few days experimenting with different prompts to determine what would be most helpful in our context. The goal was not to generate a solid wall of text that would be a slog for our human authors to review; that wouldn't be productive. Instead, the prompts were structured so that the output would be easy for a human to review.

The authors were split into groups to review specific chapter summaries generated by the AI tools.

Next, as in the editing rounds of a traditional Sprint, our human authors commented on the summarized text produced by ChatGPT. This is how we brought humans back into the loop to ensure the accuracy of the generated text. These comments were then fed back into ChatGPT as prompts to further edit and refine the text under the authors' guidance.

The result of this Book Sprint was not quite the full manuscript initially intended. Instead, our authors now have rough drafts of chapters and key points they negotiated collaboratively: a somewhat different kind of starting point for further work on this publication!

Ways Forward

This mini-Sprint offered insights, both to the participants and to Book Sprints, into the use of AI to assist human expert knowledge production.

In a short feedback session after the Sprint, several participants shared that they had used AI tools before and were quite open to the idea. Others discovered tools in this Sprint that they had not known about before. They found the transcript incredibly useful: it was a good way to ensure that no ideas or insights slipped through the cracks of a long, winding discussion, and it served as a helpful record to have on hand after the conversations had concluded.

The transcript was, however, also very long, almost too long to review and check for correctness. Our facilitators had noticed this as well in the initial experiment for the Sprint design: a 30-minute conversation could produce up to 10,000 words, or about 25 pages. Reading it to ensure everything had been captured correctly would take almost as long as the conversation itself, if not longer, since factors such as distance from the microphone, speakers switching to their native language, or accents when speaking English could all affect the transcription.

The authors were less enthusiastic about the ChatGPT summaries. During the breakout sessions for editing them, only some groups found that the summaries captured the discussion correctly. One group found that the summary as a whole was not what they had envisioned at all. Authors also noted a distinct “ChatGPT” language in the style and narrative: a voice that was not theirs, and not one they particularly wanted to use. The language tended to be a bit too glossy and smooth, which made it harder to read closely and spot mistakes or needed corrections. The voice sounded too finished and too confident in what it was saying. Because of this, authors found it hard to use the text as a starting point rather than overhauling it entirely.

As a facilitator, Barbara also observed that some situations still call for analog methods because they simply work best. For example, one of the tools we tried in this mini-Sprint was a word cloud generated from the transcript of the conversation, meant to mimic one of the post-it exercises we usually run in traditional Sprints. The goal was to visualize key terms in the word cloud to further guide participants toward their shared goal and ideas. However, even after we tweaked the word cloud to remove common filler words, the resulting ‘cloud’ still did not work as intended. Barbara quickly pivoted back to post-its, although they had not been planned, to clarify ideas and discussion points. This tried-and-true method proved effective in strengthening alignment and collaboration between the participants.

The tried-and-true formula of post-its on the wall + the right facilitation still never fails!

Ultimately, this was a successful mini-Sprint that met the goal of collaboratively producing initial material for a larger group publication on Ludology, even if it was not a full manuscript. The mini-Sprint was also a successful AI experiment, in that we gained more insight into how useful certain AI tools are. We have identified tweaks for our next run with these tools, such as breaking ChatGPT prompts down into even smaller steps to keep the output faithful to the transcribed conversation. We are also exploring prompts that produce key phrases and bullet points instead of full, cohesive paragraphs, which would make it easier for human experts to review, organize, and edit the material as needed.

We’re excited for what lies ahead in the Ludology scholars’ exploration of The Anatomy of Games! Follow us on our Book Sprints socials for updates.


—

Got a great idea? Tell the world with us through a Book Sprint.

Send us a message on IG or LinkedIn, or email us at contact@booksprints.net